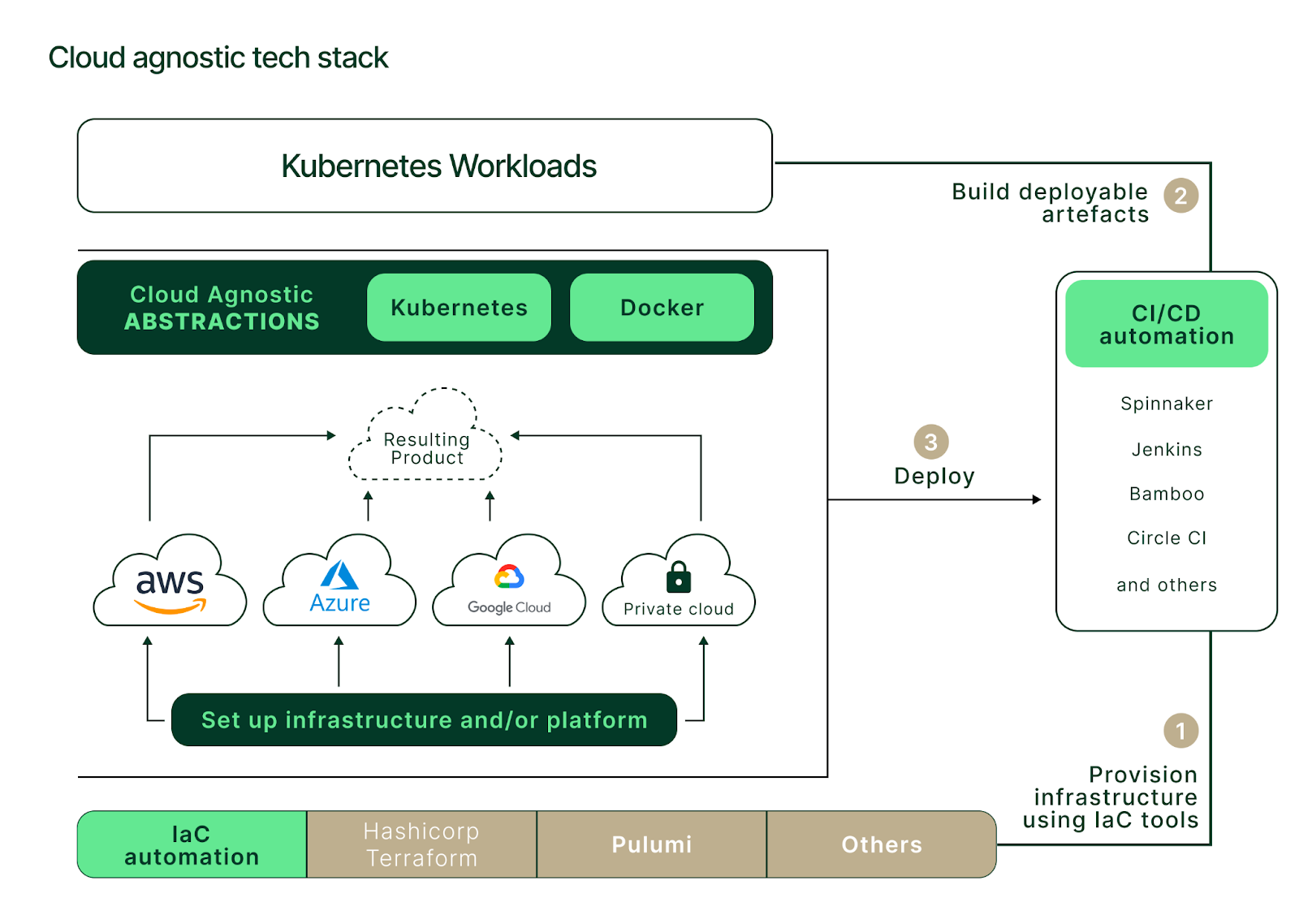

Infrastructure provisioning

Provisioning cloud resources across one or more cloud providers in a predictable, repeatable way is a significant challenge. Infrastructure-as-Code (IaC) tools help with problems like these but can be complicated. Most IaC tools, whether general-purpose ones like HashiCorp's Terraform or cloud-specific ones like AWS CloudFormation, excel within a single provider but can struggle with multi-cloud.

Solutions like Pulumi come closer to the multi-cloud ideal by allowing engineers to describe infrastructure abstracted from the specifics of individual cloud providers. Whichever tool you choose, you still need to implement and manage code for each cloud provider.
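To make the idea of a provider-neutral description concrete, here is a minimal sketch in plain Python. It does not use Pulumi or any other IaC tool's API: `ClusterSpec`, `render`, and the shape of the returned dictionary are hypothetical names invented for illustration. Only the managed service names (EKS, GKE, AKS) come from real products.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterSpec:
    """Provider-neutral description of a Kubernetes cluster (hypothetical schema)."""
    name: str
    node_count: int
    provider: str  # "aws", "gcp", or "azure"


# Managed Kubernetes service offered by each provider.
# The mapping itself is illustrative, not part of any tool.
MANAGED_SERVICE = {"aws": "EKS", "gcp": "GKE", "azure": "AKS"}


def render(spec: ClusterSpec) -> dict:
    """Translate a neutral spec into a provider-specific request (hypothetical shape)."""
    if spec.provider not in MANAGED_SERVICE:
        raise ValueError(f"unsupported provider: {spec.provider}")
    return {
        "service": MANAGED_SERVICE[spec.provider],
        "cluster_name": f"{spec.name}-{spec.provider}",
        "nodes": spec.node_count,
    }


# One logical cluster definition, rendered once per provider.
specs = [ClusterSpec("shop", 3, p) for p in ("aws", "gcp", "azure")]
plans = [render(s) for s in specs]
for plan in plans:
    print(plan)
```

Even in this toy version, the per-provider mapping has to live somewhere, which is the point made above: the abstraction reduces duplication but does not remove provider-specific code.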

Orchestration and Kubernetes distributions

Once organizations deploy their resources and Kubernetes clusters, the challenge of managing (or orchestrating) them becomes relevant. Orchestration of multi-cloud clusters is not provided out-of-the-box, so you must use a third-party solution.

Third-party orchestration solutions often come with custom Kubernetes distributions that implement or alter container runtimes, storage, and networking layers. Some of these orchestration solutions and distributions are tooled for multi-cloud environments (e.g., Rancher, Red Hat OpenShift, or Mirantis Kubernetes Engine). Others are tied to a single cloud vendor (AWS EKS, GCP GKE, Azure AKS). Each has its benefits and drawbacks, which we will examine in future articles, so watch this space!

Storage and networking

The transient nature of containers can present challenges for application and infrastructure developers, for example when storage must persist across Kubernetes clusters spread over multiple clouds.

Thanks to the Container Storage Interface (CSI), solutions like Portworx, Rook, or OpenEBS can provide users with ways to spread storage across cloud environments. Keep in mind that these solutions come with several strict networking and storage performance prerequisites and require complex configuration.

Multi-cloud requires Kubernetes resources to have network connectivity across the cloud environments. There are a few ways to extend networks beyond one cloud provider:

- VPN tunneling: involves setting up tunnels between every cloud environment. VPN can create issues for egress traffic on both sides of the tunnel, such as congestion and security concerns (BGP vulnerabilities like prefix hijacking and route leaking)

- Private connectivity: relies on telecom providers and on-premises routing to connect cloud providers through a hub. It's a costly solution with potential performance issues due to traffic being backhauled via data center infrastructure

- Software-defined networks and virtual routers: virtual devices at the edge of cloud provider networks are probably the optimal solution but add complexity and maintenance overhead
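The VPN option above implies one tunnel per pair of environments, so the number of tunnels grows quadratically with the number of environments. The short sketch below enumerates the pairs; the environment names and the `full_mesh_tunnels` helper are hypothetical and used only for illustration.

```python
from itertools import combinations


def full_mesh_tunnels(environments: list[str]) -> list[tuple[str, str]]:
    """Return every unordered pair of environments, one VPN tunnel per pair."""
    return list(combinations(sorted(environments), 2))


tunnels = full_mesh_tunnels(["aws", "gcp", "azure", "on-prem"])
for a, b in tunnels:
    print(f"{a} <-> {b}")

# For n environments a full mesh needs n * (n - 1) / 2 tunnels.
print(len(tunnels))  # 6 tunnels for 4 environments
```

Adding a fifth environment would raise the count from 6 to 10, which is one reason hub-based or software-defined approaches become attractive as the footprint grows.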

The right approach to multi-cloud networking depends first on the organization's infrastructure and its financial and engineering capabilities, and then on the requirements of the business itself.

Application deployment

Depending on the number of applications and their dependencies, deployment can become complex and require orchestration. Many approaches exist, and the right one depends on your application packaging strategy and existing CI/CD processes.

Approaches can be split into two categories:

- Use existing CI/CD pipelines to deliver applications: allows easy integration into the current development lifecycle. Key drawbacks are scalability and the maintenance toll it adds on engineers

- GitOps: offers continuous deployment of applications to destination clusters, controlled by source code and operated by the likes of Argo CD, Flux, or GitLab. The key benefit here is the ability to control everything from one source of truth while automatically keeping the deployments consistent. The main drawbacks are the need for yet another tool, its integration with existing CI/CD processes, and the diversity of cloud providers

Of course, both of the above suggestions assume your engineering teams are using Helm or Kustomize, have established code and package versioning, and have existing CI/CD processes in place.
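The core of the GitOps approach is a reconciliation step: compare the desired state held in Git with what each cluster actually runs, then act on the difference. The sketch below shows only that comparison in plain Python. It is not how Argo CD or Flux are implemented, and the application names, versions, and cluster names are made up for illustration.

```python
def reconcile(desired: dict[str, str], actual: dict[str, str]) -> dict[str, list[str]]:
    """Compare desired app versions (from Git) with what a cluster currently runs."""
    shared = desired.keys() & actual.keys()
    return {
        # In Git but missing from the cluster.
        "install": sorted(desired.keys() - actual.keys()),
        # Present in both, but running a different version.
        "upgrade": sorted(app for app in shared if desired[app] != actual[app]),
        # Running in the cluster but no longer declared in Git.
        "remove": sorted(actual.keys() - desired.keys()),
    }


# Hypothetical single source of truth and two clusters in different clouds.
desired = {"api": "1.4.0", "web": "2.1.0", "worker": "0.9.2"}
clusters = {
    "aws-prod": {"api": "1.4.0", "web": "2.0.0"},
    "gcp-prod": {"api": "1.4.0", "web": "2.1.0", "worker": "0.9.2", "legacy": "0.1.0"},
}

for name, actual in clusters.items():
    print(name, reconcile(desired, actual))
```

Because every cluster is compared against the same `desired` mapping, drift in any cloud shows up as a non-empty action list, which is what keeps deployments consistent.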

Compliance and security

Addressing compliance and security in single-cloud environments can already be a difficult task. Organizations must have authentication and authorization in place, keep up with security vulnerabilities and patches, enforce security policies, and harden environments. Multi-cloud environments require even more effort due to the sheer diversity of security and compliance implementations.

Multi-cloud setups can add further complications:

- Authentication and authorization: the necessity to integrate with each cloud provider's authentication model. It's best to have a centralized solution decoupled from the cloud provider for account, role, and policy creation

- Vulnerability patching: the necessity to keep infrastructure updated using the procedures from each provider

- Security policies and environment hardening: enforcing the restriction of unsecured ports or traffic, securing APIs and establishing least privilege. Controls must be defined centrally, translated, and distributed to different cloud platforms

- Multi-cloud storage and networking: the configuration and use of storage may involve encrypting data-at-rest and developing data loss prevention procedures. Secure multi-cloud networking requires data encryption, advanced routing solutions, and network policy management
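As a rough illustration of defining a control centrally and applying it across providers, the sketch below checks firewall rules from several clouds against one shared policy. It assumes the rules have already been exported from each provider and normalized into a common shape. The `IngressRule` type, the `violations` helper, and the list of forbidden ports are hypothetical, not any provider's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IngressRule:
    """Normalized inbound firewall rule (hypothetical cross-cloud shape)."""
    cloud: str
    port: int
    source_cidr: str


# Central policy: these ports must never be open to the whole internet.
FORBIDDEN_PUBLIC_PORTS = {22, 23, 3389}
ANYWHERE = "0.0.0.0/0"


def violations(rules: list[IngressRule]) -> list[IngressRule]:
    """Return the rules that expose a forbidden port to any source address."""
    return [
        r for r in rules
        if r.port in FORBIDDEN_PUBLIC_PORTS and r.source_cidr == ANYWHERE
    ]


rules = [
    IngressRule("aws", 443, ANYWHERE),       # public HTTPS, allowed
    IngressRule("gcp", 22, ANYWHERE),        # public SSH, violates the policy
    IngressRule("azure", 3389, "10.0.0.0/8"),  # RDP from a private range, allowed
]

for rule in violations(rules):
    print(f"{rule.cloud}: port {rule.port} is open to {rule.source_cidr}")
```

The policy lives in one place, while the translation from each provider's native rule format into `IngressRule` is where the per-cloud effort described above would go.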

Observability

Observability is an essential part of a multi-cloud platform. The observability stack must be able to consume metrics, logs, events, and outages from different platforms while remaining scalable.

There are open-source (Prometheus, Thanos, Grafana) and enterprise (Datadog, New Relic, Sematext) solutions that somewhat fit the bill, but engineering teams must still build custom dashboards and alerting mechanisms that meet their business and operational needs.

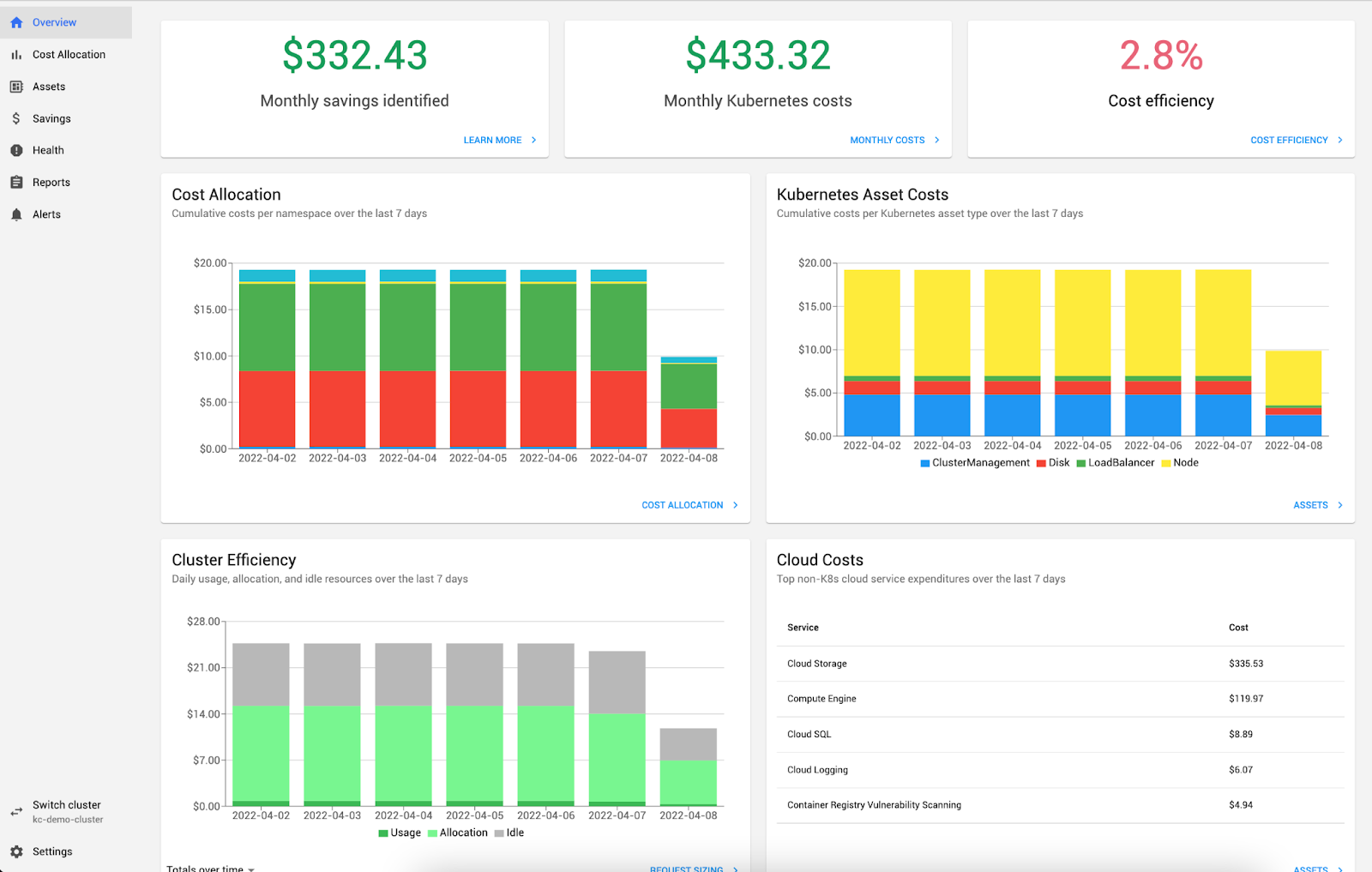

Cost of operations

A crucial topic when implementing multi-cloud systems is budget. Costs can be significantly higher than in single-cloud topologies, so keeping track of spending is a must.

Solutions like Kubecost can integrate into diverse cloud platforms and consume rich metrics from Kubernetes clusters. Kubecost can then present costs and spending data, segregated per cluster or resource, via user-friendly dashboards and reports.
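The per-cluster breakdown described here boils down to grouping spend records by cloud and cluster. The sketch below shows that grouping in plain Python. It does not call Kubecost or any billing API, and the record layout and figures are invented for illustration.

```python
from collections import defaultdict


def cost_per_cluster(records: list[tuple[str, str, float]]) -> dict[tuple[str, str], float]:
    """Sum spend in USD for each (cloud, cluster) pair."""
    totals: dict[tuple[str, str], float] = defaultdict(float)
    for cloud, cluster, usd in records:
        totals[(cloud, cluster)] += usd
    return dict(totals)


# Hypothetical daily spend records: (cloud, cluster, cost in USD).
records = [
    ("aws", "prod", 120.5),
    ("aws", "prod", 30.25),
    ("gcp", "prod", 80.0),
    ("azure", "staging", 12.75),
]

totals = cost_per_cluster(records)
for (cloud, cluster), usd in sorted(totals.items()):
    print(f"{cloud}/{cluster}: ${usd:.2f}")
```

A real cost tool would also need to allocate shared resources and reconcile against provider invoices, which this sketch deliberately leaves out.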

Kubecost is an ideal tool to keep track of overheads and is a must-have for financial stakeholders.