Kubernetes dominates container orchestration. It is celebrated for its scalability, resilience, and flexibility, and it has been widely adopted across many industries.

Kubernetes cost optimization is essential for efficient resource usage and budget control. Adopting cost-optimization best practices can lead to substantial savings and improve operational efficiency.

Wider adoption and growing environments bring the challenge of cost management. As Kubernetes environments expand to meet the demands of applications and services, resource management becomes more complex. This growth often leads to inefficient resource utilization, such as over-provisioned workloads and idle capacity, which can quickly escalate costs and strain budgets.

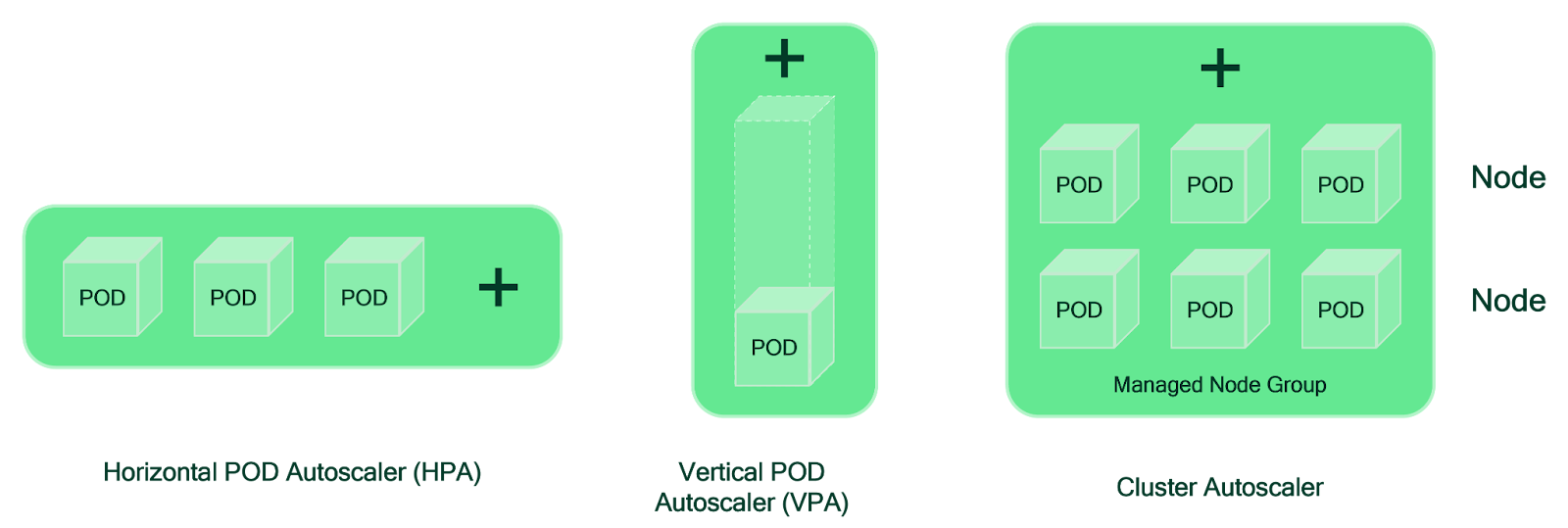

This article examines Kubernetes cost optimization and the challenges of scaling and managing Kubernetes clusters. We explore best practices for effective cost management, from implementing autoscaling to optimizing resource allocation and establishing financial governance.

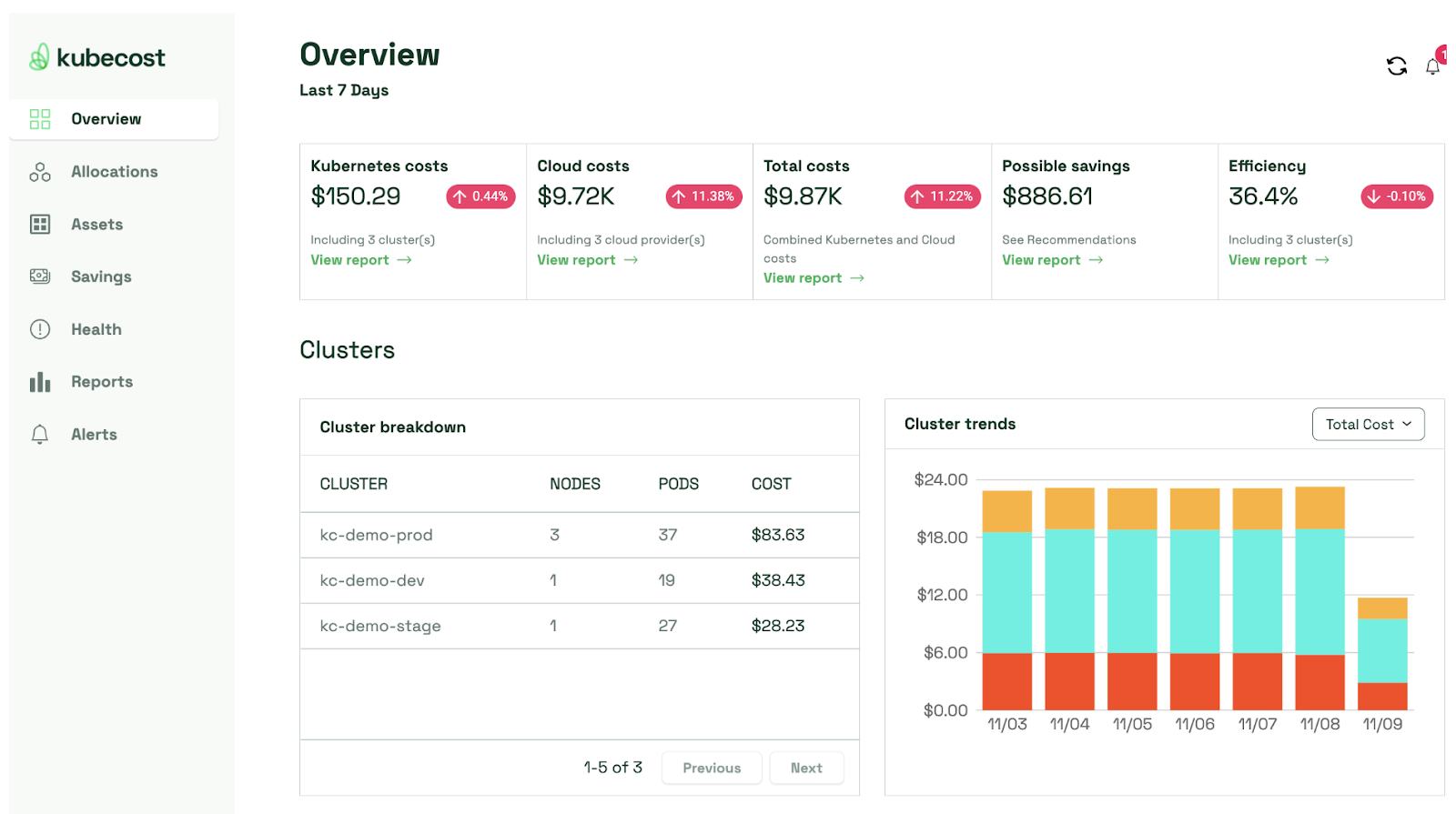

Along the way, we’ll see how third-party platforms like Kubecost can extend these practices with detailed cost visibility and allocation. By adopting the strategies described below, organizations can manage the financial complexity of Kubernetes, balance performance against cost, and support sustainable growth in cloud-native environments.