Adopting best practices when using Grafana Loki ensures optimal log management, performance, and cost-effectiveness in your logging infrastructure. Whether you're deploying Loki in a small-scale environment or at scale in production, these guidelines can help you get the most out of Loki.

Use structured logging

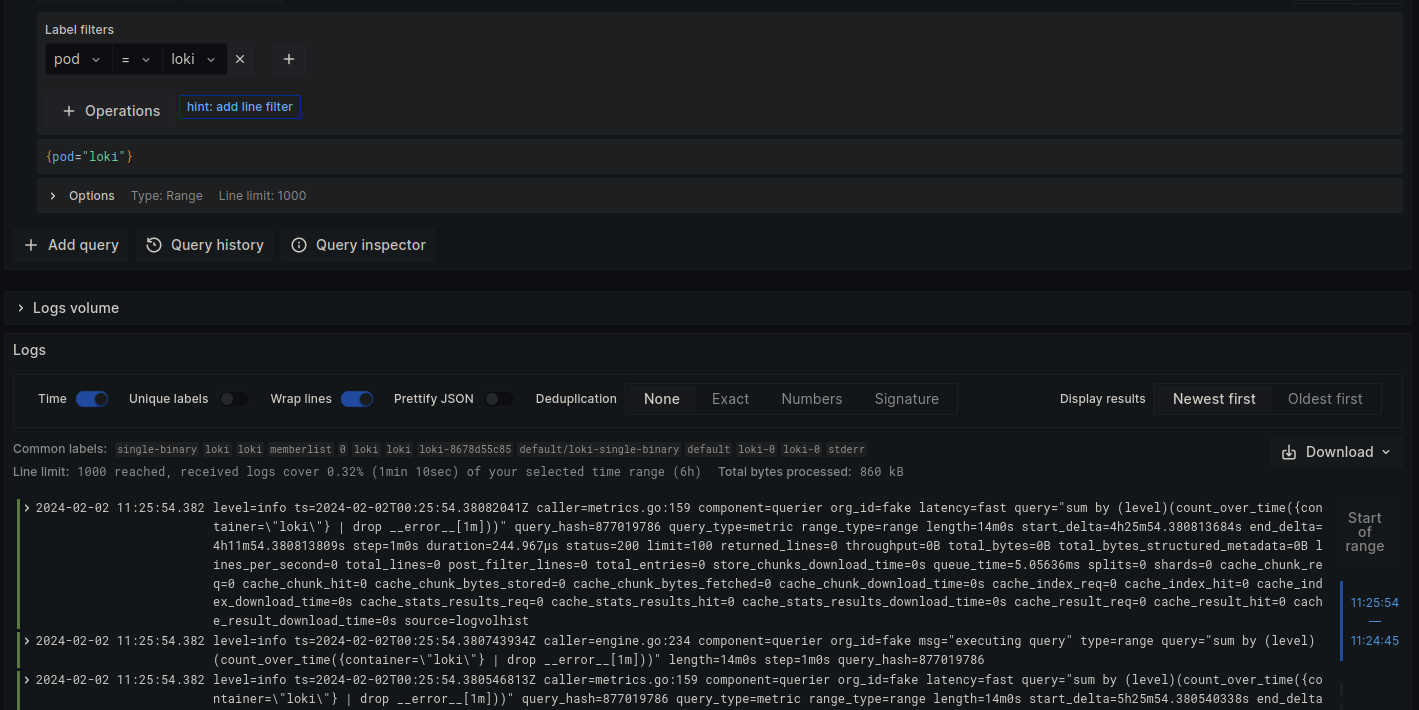

Implementing structured logs when using Grafana Loki is helpful for several reasons. When log messages are consistently formatted, applying label metadata to log streams is easier. Query filtering can be done with JSON parsing, and log format consistency across services enables easier log processing and transformations, like exporting critical log messages for auditing purposes. Committing all services in a Kubernetes cluster to a consistent log format is better than allowing each service to have its own unique log format, thereby introducing complexity when attempting to query these logs.

Here is an example of a plain-text unstructured log message. Performing log queries on this type of data is computationally expensive because the entire message must be scanned for matches:

2024-02-05 12:00:00 ERROR User login failed for user_id=1234 due to incorrect password

A better approach is to use a JSON structured log format:

{

"timestamp": "2024-02-05 12:00:00",

"level": "ERROR",

"message": "User login failed for user_id=1234 due to incorrect password",

"user_id": 1234,

"error_reason": "incorrect password"

}

The log queries can now filter by specific JSON keys like “error_reason” without performing a full scan on all the text. LogQL performance will be significantly faster by indexing the “error_reason” metadata label, and full-text searches can now be avoided.

Log selectively

Not all log messages will provide value and have relevance for analysis like troubleshooting. A best practice to improve the cost efficiency and performance of Grafana Loki is to avoid unnecessary logging and only keep log output, which may be valuable in the future. Blindly storing all logs generated by an entire Kubernetes cluster will be costly and cause performance penalties for Loki.

Similarly, implement log retention policies to automatically prune stale logs and avoid storing logs indefinitely, except logs that are relevant for long-term archival (such as for auditing purposes). Loki supports configuration fields like “retention_period” to delete old logs automatically. The project also supports implementing multiple retention policies that apply to different log streams, allowing administrators to discard low-value logs while retaining logs that may be required for long-term archival.

Optimize log labels

Loki's performance and storage requirements are closely tied to using metadata labels. Administrators should avoid unnecessarily applying labels and instead carefully curate their use. Indexing the most valuable labels for querying will help maintain performance requirements. A typical log message may only have a subset of fields that contain commonly queried data, which are the fields for which labels will be valuable for indexing. Over-indexing log data with too many labels will reduce the advantage that Loki provides with index-free logging.

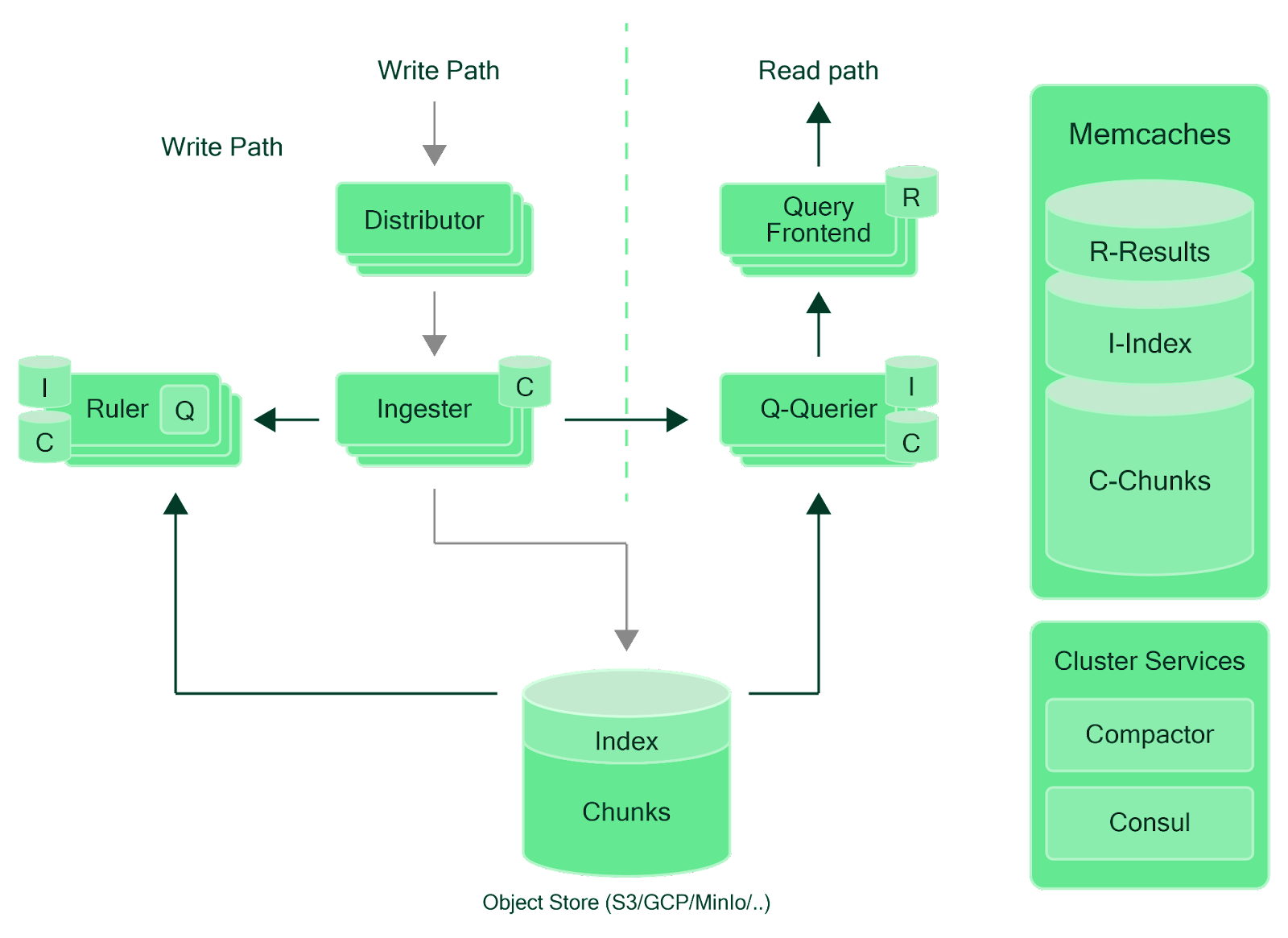

Optimize scaling

Loki exposes dozens of Prometheus metrics for all its components, allowing administrators to gain insight into performance bottlenecks and the sources of various potential issues. Regularly evaluating the metrics will help administrators detect potential resource exhaustion or scaling issues, particularly when Loki handles high log volume.

Loki natively provides automatic scaling features that can be enabled in the Loki Helm chart values.yaml file. Administrators can configure fields related to min/max replicas, target CPU/memory utilization, and scaling sensitivity. These fields provide input into HorizontalPodAutoscaler (HPA) resources that the Loki Helm chart can automatically create based on the provided values.yaml file.

Here is an example snippet of some Loki values available to configure related to autoscaling behavior:

replicas: 3

autoscaling:

enabled: false

minReplicas: 2

maxReplicas: 6

targetCPUUtilizationPercentage: 60

targetMemoryUtilizationPercentage:

behavior:

scaleUp:

policies:

- type: Pods

value: 1

periodSeconds: 900

A full example of a Loki values.yaml file and descriptions of each parameter can be found on GitHub.

Log security

Logs will often contain sensitive information that must be secured. Loki supports multi-tenancy controls, and Grafana supports an RBAC system, which should be leveraged to control user access to log data. The backend storage system (like AWS S3) should also be secured via native security controls like bucket access policies and object encryption. Maintaining the security of log data is a crucial aspect of the overall security posture of a Kubernetes cluster.

Implement a multi-faceted observability setup

Grafana Loki does not provide a full observability solution for Kubernetes clusters. Logging is a critical aspect of observability but must be complemented with other observability data to provide a complete overview of a cluster's operations. Metrics and cost allocation data are required for a comprehensive observability setup.

A multi-faceted approach to observability will require administrators to consider additional tools alongside Loki, such as Prometheus for metrics collection and Kubecost for Kubernetes cost monitoring.

Prometheus provides valuable metrics collection functionality and integrates easily with Grafana dashboards and Loki, while Kubecost extends observability by enabling financial metrics for administrators to gain insight into cluster cost breakdowns and spend optimization opportunities. Ensuring that a Kubernetes cluster has observability implemented from multiple angles ensures that administrators have complete insight into their clusters and can effectively conduct troubleshooting, incident analysis, performance optimization, and cost optimization.